Who is held responsible when no one is driving? When a driverless car breaks traffic laws, police face an unresolved problem.

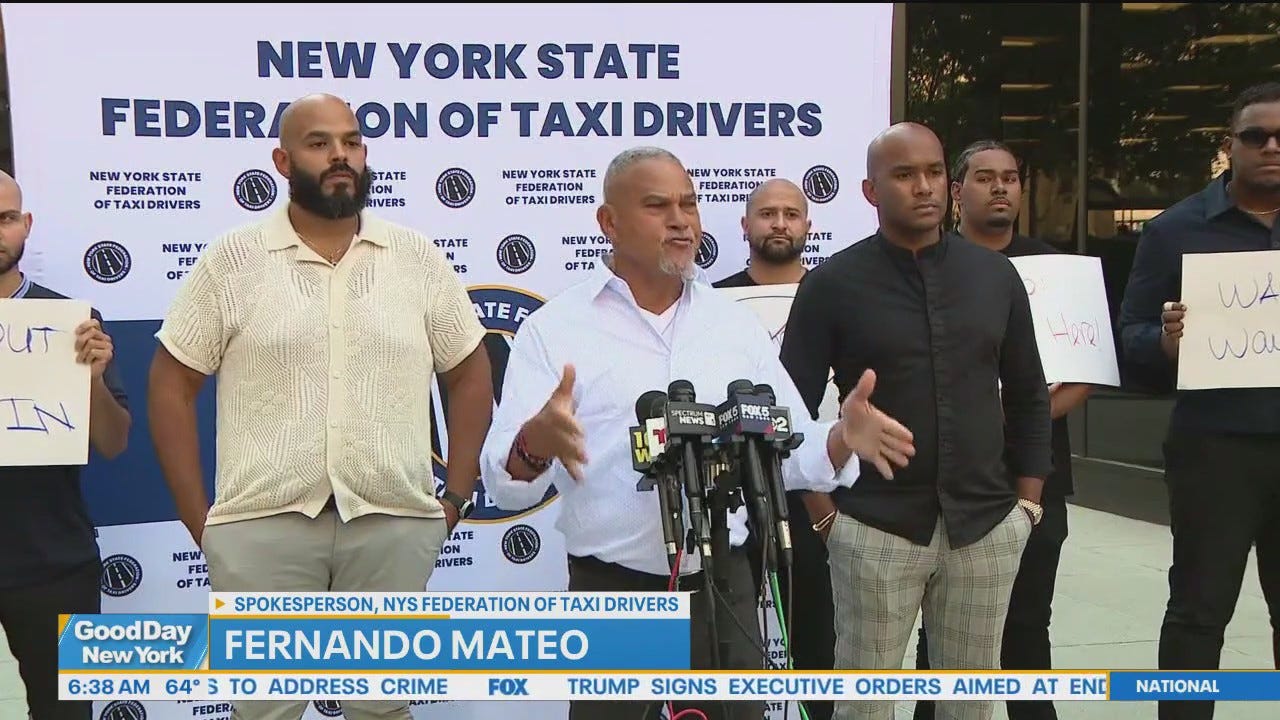

New York City taxi drivers protest Waymo

Drivers across the city held rallies to protest self-driving cars, saying the company is trying to “replace more than 200,000 drivers” in the city.

Fox 5 New York

In September, a driverless taxi was caught on video illegally passing a school bus as children were being dropped off in Georgia. That same month, a self-driving car made an illegal U-turn in California and was stopped by a confused police officer. Later that month, a robot taxi ran over and killed a beloved neighborhood cat in San Francisco.

A series of recent accidents and violations involving driverless cars has left law enforcement and state officials grappling with how to hold self-driving cars and their manufacturers accountable for their mistakes on the road.

As self-driving cars come under increasing scrutiny, one central question is emerging: What happens when a self-driving car violates traffic laws?

Vehicles that do not require a human at the steering wheel are currently operating in California, Arizona, Texas, Nevada, and Georgia. Companies like Waymo and Tesla have also begun testing their vehicles in Florida.

Nationwide, more than half of states have passed laws allowing autonomous vehicle testing and public operation, but each jurisdiction appears to have different regulations, especially when it comes to traffic violations.

For example, Arizona and Texas have laws that allow police officers to cite self-driving cars for traffic violations in the same way they would if a person were behind the wheel. A new California law set to go into effect next year will allow police officers to issue notices, but it’s unclear whether those notices will carry any penalties.

The lack of similar laws in other states, including Georgia, has left police confused and lawmakers looking for ways to regulate the emerging technology.

As companies like Waymo, Tesla and Amazon’s Zoox seek to expand their self-driving car fleets, other states will also have to decide what regulations, if any, they will implement. And some safety experts say many will need to reconsider whether the laws already in place are too lenient.

Waymo is the largest operator of robot taxis in the United States, with a fleet of more than 2,000 vehicles nationwide. The company says its taxis are designed to comply with traffic rules and that if an accident occurs, they can be stopped and held accountable.

“While we have seen several cases where local law enforcement agencies do not understand what their options and authority are, we can and do obtain and pay citations in the locations where we operate,” the company said in a statement to USA TODAY. “State regulators may also suspend AV operations for legitimate safety reasons.”

Some observers are concerned about the relationship between self-driving car companies and state governments tasked with keeping our roads safe.

“For all practical purposes, companies can do just about anything they want to do, and we just hope they have a strong enough appetite for safety,” said Phil Koopman, a professor at Carnegie Mellon University with decades of experience in self-driving car safety.

He added: “No state in the United States holds these companies fully accountable.”

“No driver, no hands, no clue.”

In late September, the San Bruno Police Department in California’s Bay Area posted multiple images on social media of one of its officers looking into the open window of a Waymo driverless taxi.

The caption said that during a drunk-driving investigation, a police officer saw the Waymo make an illegal U-turn at a traffic light and stopped it. Waymo, owned by Google’s parent company Alphabet, says its vehicles are designed to stop when they detect police lights or sirens.

“That’s right…no driver, no hands, no clue,” the police department said in a post about the incident. “Officers stopped the vehicle and contacted the company to inform them of the ‘malfunction’.”

The statement added: “We were unable to issue a ticket because there was no human driver (there is no ‘robot’ column in our citation book).”

The traffic stop made national headlines and became a prime example of the predicament police find themselves in when stopping self-driving cars. No one is behind the wheel. So who gets punished for mistakes?

Starting July 1, 2026, California police officers will be able to issue “violation notices” to self-driving car companies in connection with suspected traffic violations. But so far, the state has not said what the penalties associated with these notices will be, and experts are skeptical that they will have any impact on manufacturers.

“As far as I know, violation notices are of no use,” Koopman said. “If the school writes to mom and dad and they decide not to do anything, there are no consequences.”

What is a traffic ticket to a billion-dollar company?

Even in states where police officers can cite companies for self-driving car traffic violations, such fines are just a drop in the bucket.

Koopman said tickets, which provide a written record of violations and a way to track them, are the beginning of accountability, but far from a solution.

“For these companies, a $100 traffic fine is not going to get much attention,” he said.

Meanwhile, police officials say tickets are rarely issued for robot taxis.

Phoenix was the first city where Waymo launched robotaxis, and it remains the main hub for the service. “I am not aware of any incidents where self-driving cars have been a topic of discussion,” Phoenix Police Department spokesman Brian Bower said in an email to USA TODAY.

In Atlanta, lawmakers vow to draft robotaxi regulations

In September, a Waymo taxi in Atlanta was recorded failing to stop for a school bus as children were being dropped off. The incident sparked an immediate backlash, with the National Highway Traffic Safety Administration launching an investigation into hundreds of the company’s self-driving cars.

Video of the incident shows a Waymo taxi driving around the school bus while the bus’s red lights were flashing and its stop arm was deployed. NHTSA said in a statement that Waymo taxis drive an average of 2 million miles per week, and given the heavy use of robotaxis, “it is likely that similar incidents have occurred before.”

Georgia, where the incident occurred, does not have a law that allows police to cite self-driving cars. But the video has sparked outrage among state lawmakers, some of whom have vowed to take action.

Republican state Sen. Rick Williams told KGW8 he plans to introduce legislation to hold self-driving car companies accountable. Williams was a sponsor of Addy’s Law, which introduced the possibility of jail time and fines for those who illegally pass school buses with flashing lights.

“Self-driving cars should be stopped until we figure this out,” he said, adding: “They shouldn’t be on the roads because they’re too dangerous for children.”

Georgia state Rep. Clint Crowe, a co-sponsor of Addy’s Law, said he wants companies to be penalized for self-driving car violations in the same way as regular drivers.

“I’m a big fan of new and emerging technologies…but we had to think about how they would comply with the law,” he told the outlet. “We’re going to have to really rethink who’s in charge, who’s responsible for controlling that vehicle, and who’s going to be driving that vehicle.”

How safe are self-driving cars?

Companies leading the self-driving revolution, including Waymo and Tesla, claim their vehicles are safer than cars driven by humans, but experts say there isn’t enough data to support such claims.

According to a study published in December by insurance giant Swiss Re, Waymo vehicles had traveled more than 25.3 million miles and generated nine property damage claims and two personal injury claims. According to the study, human-driven vehicles covering the same number of miles would be expected to generate 78 property damage claims and 26 personal injury claims.

But a study published by the RAND Corporation, a nonpartisan global think tank, found that self-driving cars would need to travel 275 million miles without failing before they could be considered as safe as humans. Waymo, which has the largest fleet of robot taxis, reached 100 million miles this summer.

Missy Cummings, a professor at George Mason University and an expert on self-driving car safety, cited the RAND study and said claims that self-driving cars are safer than humans are a “gross statistical exaggeration.”

“Humans drive trillions of miles every year,” she said. “[Autonomous vehicles] are in the low millions, so you can’t compare the two at this point.”

Regulators now face a new challenge: how to enable innovation while keeping safety in view. Cummings and Koopman told USA TODAY that self-driving cars will encounter different scenarios and be updated to improve. But as more self-driving cars spread across the country and spend more time driving in communities, the likelihood of an accident will increase.

The companies themselves are aware of this.

At a technology conference in San Francisco last month, a reporter asked Waymo co-CEO Tekedra Mawakana whether she thought society would “accept death that could be caused by robots.”

“I think society will,” Mawakana said, adding that the issue applies to all self-driving car companies, not just Waymo, according to SF Gate. “I think the challenge for us is to ensure that society has high enough safety standards that it imposes on businesses.”

Contributor: Elizabeth Weise